I study methods at the interface of machine learning and control, including reinforcement learning, imitation learning, model predictive control, and dynamic mode decomposition. I am most interested in approaches built on mathematical structure and optimization principles, which make decision-making and control both interpretable and efficient. My research aims to develop interpretable, theoretically grounded methods for decision-making in partially observable real-world systems.

I am a Ph.D. student in Computer Science at Cornell University, working with Sarah Dean. Before joining Cornell, I was a research scientist at the Korea Institute of Science and Technology (KIST). I received my M.S. from Korea University and my B.S. from the University of Seoul.

📖 Education

- Ph.D. Student in Computer Science, Aug. 2024 - Present
  - Cornell University
  - Advisor: Sarah Dean

- M.S. in Electrical and Computer Engineering, Mar. 2021 - Aug. 2023
  - Korea University
  - Major: Control, Robotics and Systems (GPA: 4.39/4.5)
  - Research Scholarship from Hyundai Motor Group, Mar. 2021 - Dec. 2022

- B.S. in Electrical and Computer Engineering, Mar. 2017 - Feb. 2021
  - University of Seoul (GPA: 4.0/4.5)
  - Research Scholarship from Hyundai Motor Group, Sep. 2019 - Dec. 2020

📝 Publications

Sparse-to-Field Reconstruction via Stochastic Neural Dynamic Mode Decomposition

- Proposes a probabilistic DMD–Neural ODE model that reconstructs continuous spatiotemporal fields from only 10% sparse observations with uncertainty quantification.

- Recovers interpretable Koopman modes and continuous-time eigenvalues, and learns distributions over dynamics across realizations.

- Submitted to L4DC 2026, project website

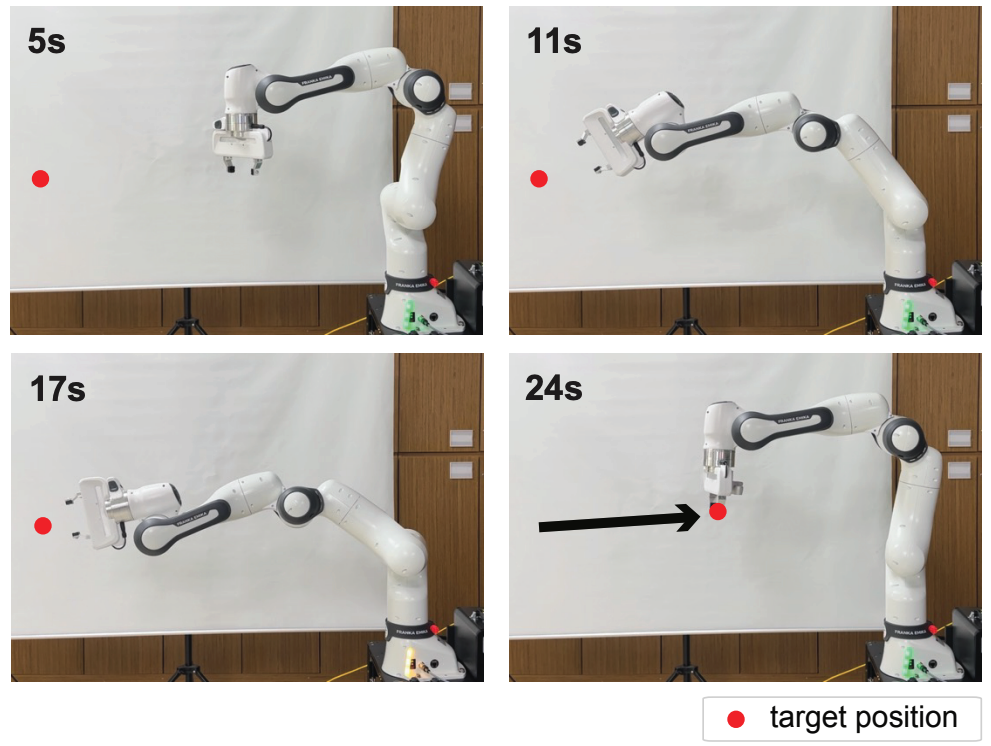

- Proposes a real-time MPPI controller that achieves high-frequency task-space manipulation by redesigning its sampling and horizon structure.

- Establishes that constant-perturbation rollouts remain theoretically compatible with MPPI weighting.

- Published in IEEE Transactions on Robotics, supplementary video

Distilling Realizable Students from Unrealizable Teachers

- Studies policy distillation in an asymmetric imitation learning setting.

- Proposes two new IL/RL algorithms that are robust to state aliasing.

- Published in the Proceedings of IROS 2025, project website

Subspace-wise Hybrid RL for Articulated Object Manipulation

- Develops manipulator skills for operating equipment at industrial sites (e.g., valves, switches, gear levers).

- Uses reinforcement learning to acquire these skills with minimal human-engineered features.

- Submitted to ICRA 2026

Whole-body motion planning of dual-arm mobile manipulator for compensating for door reaction force

- Addresses the challenges of motion planning for door traversal.

- Presents a unified framework for door traversal with a dual-arm mobile manipulator, covering approaching, opening, passing through, and closing the door.

- Uses RL for decision-making in optimal contact-point planning.

- Presented at an ICRA 2025 workshop

- Tackles the skewed sub-goal distribution faced by goal-conditioned RL controllers.

- Enables adaptive sub-goal planning and efficient reward learning via MPC-synchronized rewards.

- Published in Applied Sciences

Reinforcement Learning for Autonomous Vehicle using MPC in Highway Situation

- Addresses the challenge of reward shaping for continuous RL controllers by using an MPC reference.

- Published in the Proceedings of ICEIC 2022

🎖 Honors and Awards

- 2025, IROS-SDC Travel Award.

- 2024, Student Travel Grant, ICRA 2024 (MOMA.v2 Workshop).

- Sep. 2018 - Dec. 2022, Full Scholarship for Selected Research Student, Hyundai Motor Company.

- May 2022, 10th F1TENTH Autonomous Racing Grand Prix, 3rd Place, ICRA 2022.

- Spring 2018, Scholarship for Excellent Achievement, University of Seoul.

- Jul. 2018, 2018 Intelligent Model Car Competition, 3rd Place, Hanyang University.

- Jul. 2017, 14th Microrobot Competition, Special Award for Women Engineer, Dankook University.

💻 Work Experience

- Jan. 2023 - 2024, Research Scientist, Korea Institute of Science and Technology, Republic of Korea.

- Jul. 2019, Hyundai Motor Group, Republic of Korea.